I started by downloading the latest version of AFL (2.52b) and compiling it. This went as smoothly as one could hope for and I experienced no issues, although having done this several times before probably helps.

libnsbmp

I started with libnsbmp, which is used to render Windows BMP and ICO files; ICO remains a very popular format for website favicons. The library was built with AFL instrumentation enabled, some output directories were created for results, and a main and four subordinate fuzzer instances were started.

    vince@workshop:libnsbmp$ LD=afl-gcc CC=afl-gcc AFL_HARDEN=1 make VARIANT=debug test
    afl-cc 2.52b by <lcamtuf@google.com>
    afl-cc 2.52b by <lcamtuf@google.com>
    afl-cc 2.52b by <lcamtuf@google.com>
     COMPILE: src/libnsbmp.c
    afl-cc 2.52b by <lcamtuf@google.com>
    afl-as 2.52b by <lcamtuf@google.com>
    [+] Instrumented 633 locations (64-bit, hardened mode, ratio 100%).
          AR: build-x86_64-linux-gnu-x86_64-linux-gnu-debug-lib-static/libnsbmp.a
     COMPILE: test/decode_bmp.c
    afl-cc 2.52b by <lcamtuf@google.com>
    afl-as 2.52b by <lcamtuf@google.com>
    [+] Instrumented 57 locations (64-bit, hardened mode, ratio 100%).
        LINK: build-x86_64-linux-gnu-x86_64-linux-gnu-debug-lib-static/test_decode_bmp
    afl-cc 2.52b by <lcamtuf@google.com>
     COMPILE: test/decode_ico.c
    afl-cc 2.52b by <lcamtuf@google.com>
    afl-as 2.52b by <lcamtuf@google.com>
    [+] Instrumented 71 locations (64-bit, hardened mode, ratio 100%).
        LINK: build-x86_64-linux-gnu-x86_64-linux-gnu-debug-lib-static/test_decode_ico
    afl-cc 2.52b by <lcamtuf@google.com>
    Test bitmap decode
    Tests:1053 Pass:1053 Error:0
    Test icon decode
    Tests:609 Pass:609 Error:0
        TEST: Testing complete
    vince@workshop:libnsbmp$ mkdir findings_dir graph_output_dir
    vince@workshop:libnsbmp$ afl-fuzz -i test/ns-afl-bmp/ -o findings_dir/ -S f02 ./build-x86_64-linux-gnu-x86_64-linux-gnu-debug-lib-static/test_decode_bmp @@ /dev/null > findings_dir/f02.log >&1 &
    vince@workshop:libnsbmp$ afl-fuzz -i test/ns-afl-bmp/ -o findings_dir/ -S f03 ./build-x86_64-linux-gnu-x86_64-linux-gnu-debug-lib-static/test_decode_bmp @@ /dev/null > findings_dir/f03.log >&1 &
    vince@workshop:libnsbmp$ afl-fuzz -i test/ns-afl-bmp/ -o findings_dir/ -S f04 ./build-x86_64-linux-gnu-x86_64-linux-gnu-debug-lib-static/test_decode_bmp @@ /dev/null > findings_dir/f04.log >&1 &
    vince@workshop:libnsbmp$ afl-fuzz -i test/ns-afl-bmp/ -o findings_dir/ -S f05 ./build-x86_64-linux-gnu-x86_64-linux-gnu-debug-lib-static/test_decode_bmp @@ /dev/null > findings_dir/f05.log >&1 &
    vince@workshop:libnsbmp$ afl-fuzz -i test/ns-afl-bmp/ -o findings_dir/ -M f01 ./build-x86_64-linux-gnu-x86_64-linux-gnu-debug-lib-static/test_decode_bmp @@ /dev/null
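The five afl-fuzz command lines above differ only in the instance name and in the -S or -M role flag, so they could be generated rather than typed. The following is a minimal sketch of that idea, not what was actually run: the function name `afl_commands` is hypothetical, paths containing spaces are not handled, and the sketch writes `2>&1` so that error output also ends up in the log file, which is an assumption about the intent.

```shell
#!/bin/sh
# Sketch: print one afl-fuzz command line per fuzzer instance.
# Usage: afl_commands INPUT_DIR OUTPUT_DIR TARGET [SECONDARIES]
# (afl_commands is a hypothetical helper, not part of AFL.)
afl_commands() {
    in_dir=$1
    out_dir=$2
    target=$3
    secondaries=${4:-4}

    # Secondary instances (-S) are numbered f02, f03, ... and are meant to
    # run in the background with their output captured in a log file.
    n=2
    while [ "$n" -le $((secondaries + 1)) ]; do
        name=$(printf 'f%02d' "$n")
        echo "afl-fuzz -i $in_dir -o $out_dir -S $name $target @@ /dev/null > $out_dir/$name.log 2>&1 &"
        n=$((n + 1))
    done

    # The main instance (-M) stays in the foreground so its status screen
    # remains visible.
    echo "afl-fuzz -i $in_dir -o $out_dir -M f01 $target @@ /dev/null"
}

# Example:
#   afl_commands test/ns-afl-bmp findings_dir \
#       ./build-x86_64-linux-gnu-x86_64-linux-gnu-debug-lib-static/test_decode_bmp
```

Printing the commands instead of executing them keeps the sketch harmless; the output can be reviewed and then pasted into a shell.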

executions per second. This might be improved with better cooling but I have not investigated this.

After five days and six hours, the "cycle count" field on the master instance had turned green, which the AFL documentation suggests means the fuzzer is unlikely to discover anything new, so the run was stopped.

Just before stopping, the afl-whatsup tool was used to examine the state of all the running instances.

    vince@workshop:libnsbmp$ afl-whatsup -s ./findings_dir/
    status check tool for afl-fuzz by <lcamtuf@google.com>
    Summary stats
    =============
           Fuzzers alive : 5
          Total run time : 26 days, 5 hours
             Total execs : 2873 million
        Cumulative speed : 6335 execs/sec
           Pending paths : 0 faves, 0 total
      Pending per fuzzer : 0 faves, 0 total (on average)
           Crashes found : 0 locally unique
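The summary figures are totals across all five instances, which is why the "Total run time" is roughly five times the wall-clock duration of the run. As a rough cross-check, the sketch below divides the totals back down. It assumes every instance ran for the same period and that the cumulative speed is the sum of the per-instance averages; the function name `whatsup_check` is hypothetical.

```shell
#!/bin/sh
# Sketch: cross-check an afl-whatsup summary using integer arithmetic.
# Usage: whatsup_check INSTANCES DAYS HOURS MILLION_EXECS
# (whatsup_check is a hypothetical helper, not part of AFL.)
whatsup_check() {
    instances=$1
    days=$2
    hours=$3
    million_execs=$4

    # "Total run time" converted to minutes, then shared equally between
    # the instances to estimate the wall-clock duration of the run.
    total_minutes=$(( (days * 24 + hours) * 60 ))
    per_minutes=$(( total_minutes / instances ))
    echo "per instance: $(( per_minutes / 1440 ))d $(( per_minutes / 60 % 24 ))h $(( per_minutes % 60 ))m"

    # Average executions per second of one instance, scaled back up to
    # all instances for comparison with "Cumulative speed".
    per_eps=$(( million_execs * 1000000 / (total_minutes * 60) ))
    echo "summed speed: $(( per_eps * instances )) execs/sec"
}

# Figures copied from the libnsbmp summary above.
whatsup_check 5 26 5 2873
```

For the libnsbmp figures this gives a little under five days and six hours per instance and a summed speed within a fraction of a percent of the reported 6335 execs/sec; the small difference is expected because the summary rounds its totals.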

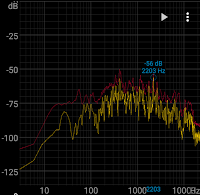

Just for completeness, there is also the graph of how the fuzzer performed over the run.

There were no crashes at all (and none have been detected through fuzzing since the original run). The 78 reported hangs were checked and all actually decode in a reasonable time; it seems the fuzzer's default "hang" detection is simply a little aggressive for larger images.
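Checking reported hangs amounts to replaying each saved input through the decoder with a more generous time limit. The sketch below shows one way this could be done; it is not necessarily how the 78 files were checked. It assumes the usual AFL layout of one `hangs` directory per instance under the output directory, relies on `timeout` from GNU coreutils, and uses a hypothetical function name, `recheck_hangs`.

```shell
#!/bin/sh
# Sketch: replay every input AFL saved as a "hang" and report those that
# still fail to finish within a chosen limit.
# Usage: recheck_hangs FINDINGS_DIR LIMIT_SECONDS DECODER [ARGS...]
# (recheck_hangs is a hypothetical helper, not part of AFL.)
recheck_hangs() {
    findings=$1
    limit=$2
    shift 2
    slow=0
    for f in "$findings"/*/hangs/*; do
        [ -f "$f" ] || continue      # the glob matched nothing
        status=0
        # The decoder gets the input file and /dev/null as output, as in
        # the fuzzing command line. timeout exits with 124 on expiry.
        timeout "$limit" "$@" "$f" /dev/null >/dev/null 2>&1 || status=$?
        if [ "$status" -eq 124 ]; then
            echo "still hanging: $f"
            slow=$((slow + 1))
        fi
    done
    echo "exceeded ${limit}s: $slow"
}

# Example:
#   recheck_hangs findings_dir 10 \
#       ./build-x86_64-linux-gnu-x86_64-linux-gnu-debug-lib-static/test_decode_bmp
```

Any file still listed with a limit of several seconds would deserve a closer look; an empty list supports the view that the default detection is simply too strict for large images.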

libnsgif

I went through a similar setup with libnsgif, which is used to render the GIF image format. The run was performed on a similar system running for five days and eighteen hours. The outcome was similar to libnsbmp, with no hangs or crashes.

    vince@workshop:libnsgif$ afl-whatsup -s ./findings_dir/
    status check tool for afl-fuzz by <lcamtuf@google.com>
    Summary stats
    =============
           Fuzzers alive : 5
          Total run time : 28 days, 20 hours
             Total execs : 7710 million
        Cumulative speed : 15474 execs/sec
           Pending paths : 0 faves, 0 total
      Pending per fuzzer : 0 faves, 0 total (on average)
           Crashes found : 0 locally unique

libsvgtiny

I then ran the fuzzer on the SVG render library, using a dictionary to help the fuzzer cope with a sparse textual input format. The run was allowed to continue for almost fourteen days with no crashes or hangs detected. In an ideal situation this run would have been allowed to continue, but the system running it required a restart for maintenance.
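An AFL dictionary is a plain text file of `name="value"` entries that is handed to afl-fuzz with the `-x` option, giving the fuzzer ready-made tokens to splice into its inputs. The sketch below writes a small example file. The token list is purely illustrative and is not the dictionary used for this run, and `write_svg_dict`, `SEED_DIR` and `TARGET` are hypothetical names.

```shell
#!/bin/sh
# Sketch: write a small AFL dictionary of SVG tokens to the given file.
# Usage: write_svg_dict FILE
# (write_svg_dict is a hypothetical helper; the tokens are examples only.)
write_svg_dict() {
    cat > "$1" <<'EOF'
# AFL dictionary syntax: one name="value" entry per line
tag_svg="<svg"
tag_g="<g"
tag_path="<path"
tag_rect="<rect"
tag_close="/>"
attr_viewbox="viewBox="
attr_fill="fill="
attr_transform="transform="
EOF
}

# Example:
#   write_svg_dict svg.dict
#   afl-fuzz -i SEED_DIR -o findings_dir -x svg.dict -M f01 TARGET @@
```

Without such tokens the fuzzer has to discover strings like `<path` one byte at a time, which is why a dictionary helps with text formats.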

Conclusion

The aphorism "absence of proof is not proof of absence" seems to apply to these results. While the new fuzzing runs revealed no additional failures, this does not mean there are no defects in the code to find. All I can really say is that the AFL tool was unable to find any failures within the time available. Additionally, the AFL test corpus produced did not significantly change the code coverage metrics, so the existing set was retained.

Will I spend the time again in future to re-run these tests? Perhaps, but I think more would be gained from enabling the fuzzing of the other NetSurf libraries and picking the low-hanging fruit from there than expending thousands of hours performing these runs again.